Set up online evaluations

Before diving into this content, it might be helpful to read the following:

Online evaluations is a powerful LangSmith feature that allows you to run an LLM-as-a-judge evaluator on a set of your production traces. They are implemented as a possible action in an automation rule.

Currently, we provide support for specifying a prompt template, a model, and a set of criteria to evaluate the runs on.

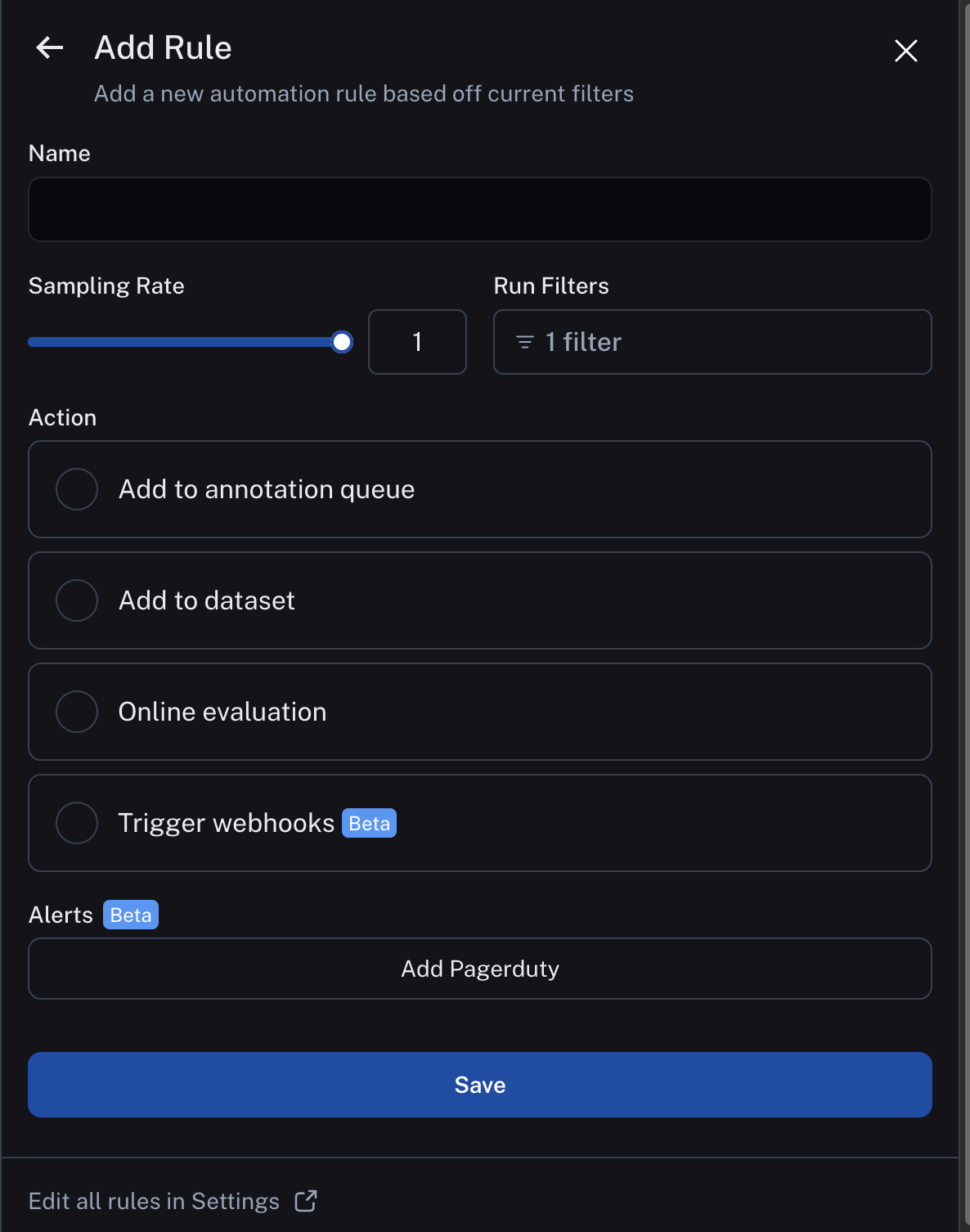

After entering rules setup, you can select Online Evaluation from the list of possible actions:

Configure online evaluations

When selection Online Evaluation as an action in an automation, you are presented with a panel from which you can configure online evaluation.

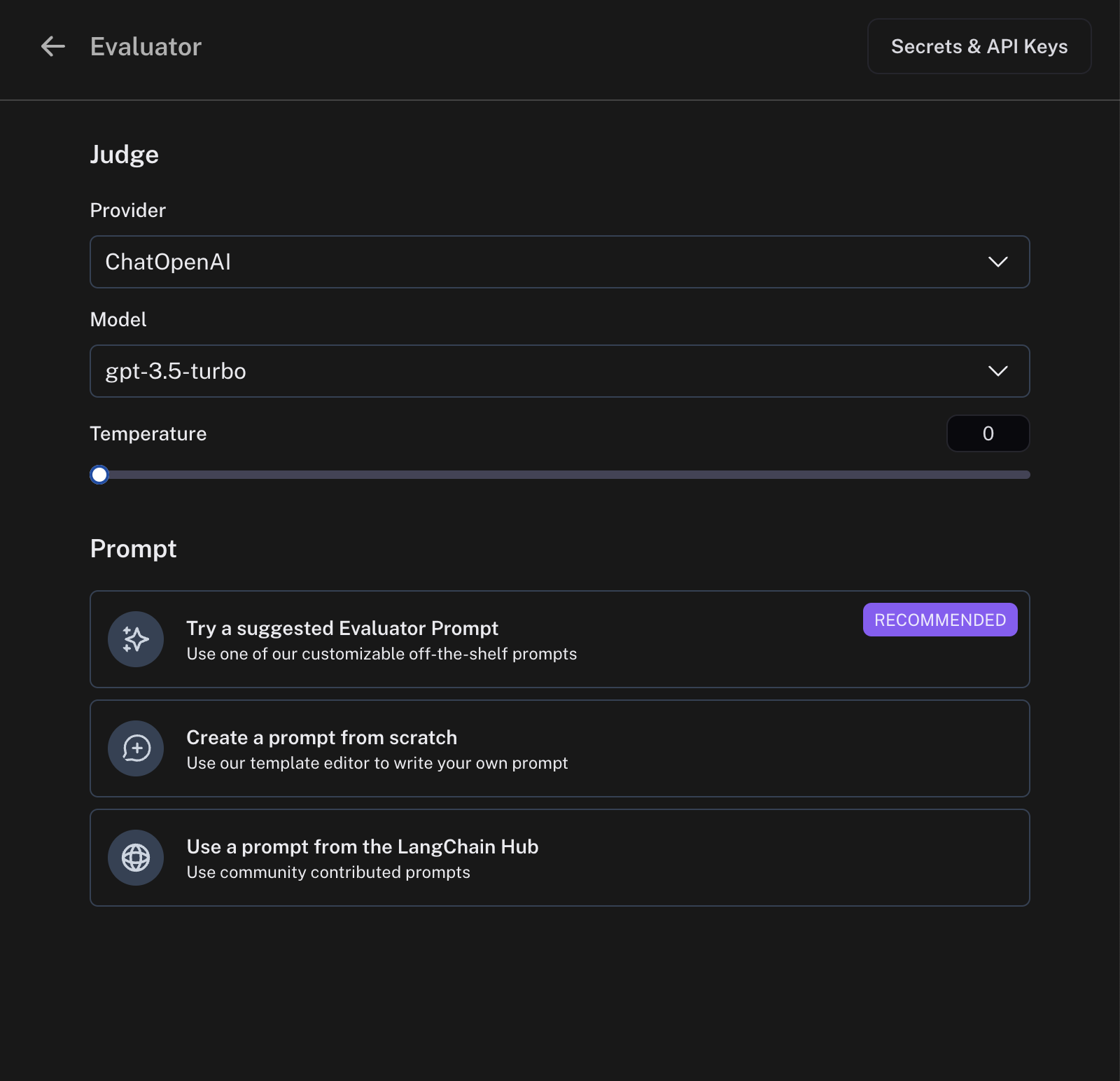

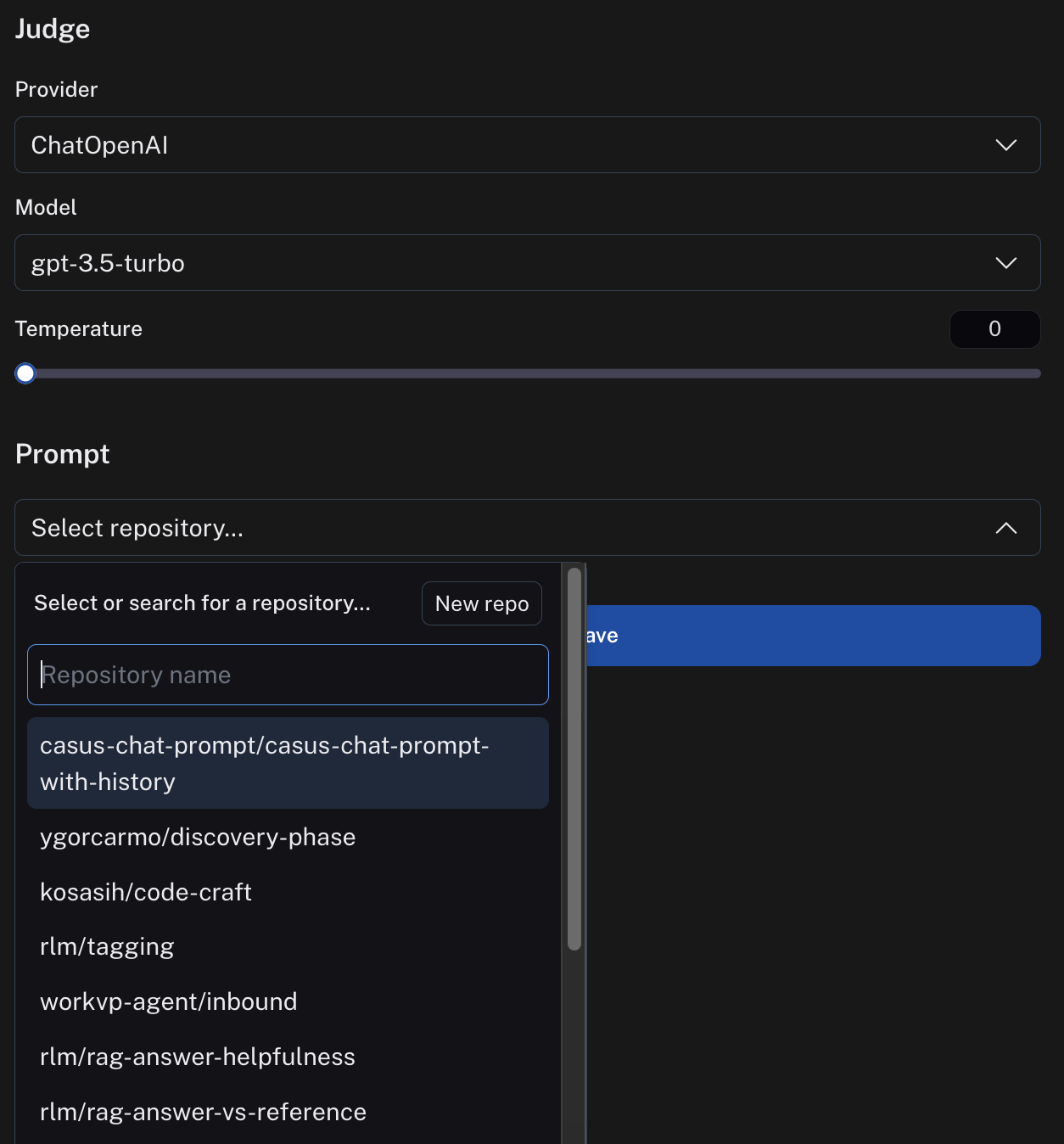

Model

You can choose any model available in the dropdown. Currently, we support OpenAI, AzureOpenAI, and models hosted on Fireworks.

Prompt template

For the prompt template, you can select a suggested evaluator prompt, create a new prompt, or choose a prompt from the LangChain Hub.

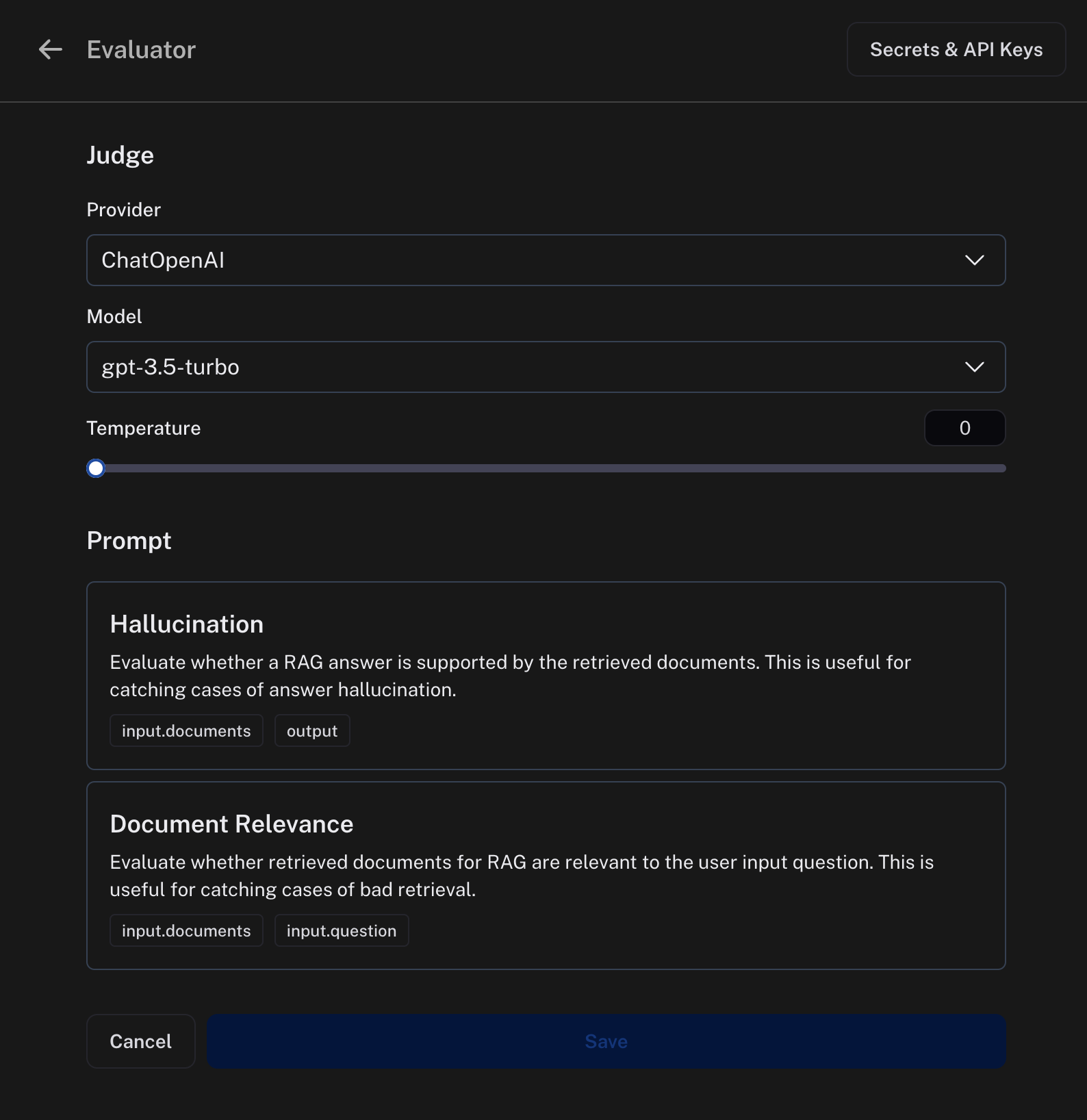

Suggested evaluator prompts

We provide a list of pre-existing prompts that cater to common evaluator use cases. Once you select a prompt, you can customize it in the prompt editor to work with your run schema.

Create your own prompt

We also provide a prompt editor where you can create your own prompt.

We support two template formats:

- mustache: anything in

{{...}}is treated as a mustache variable. Mustache supports more robust templating, including nesting variables. See the mustache documentation for more information. - f-string: anything in

{...}is treated as an f-string variable.

Evaluator prompts can only contain the following root input variables:

input: the input to the target you are evaluatingoutput: the output of the target you are evaluating

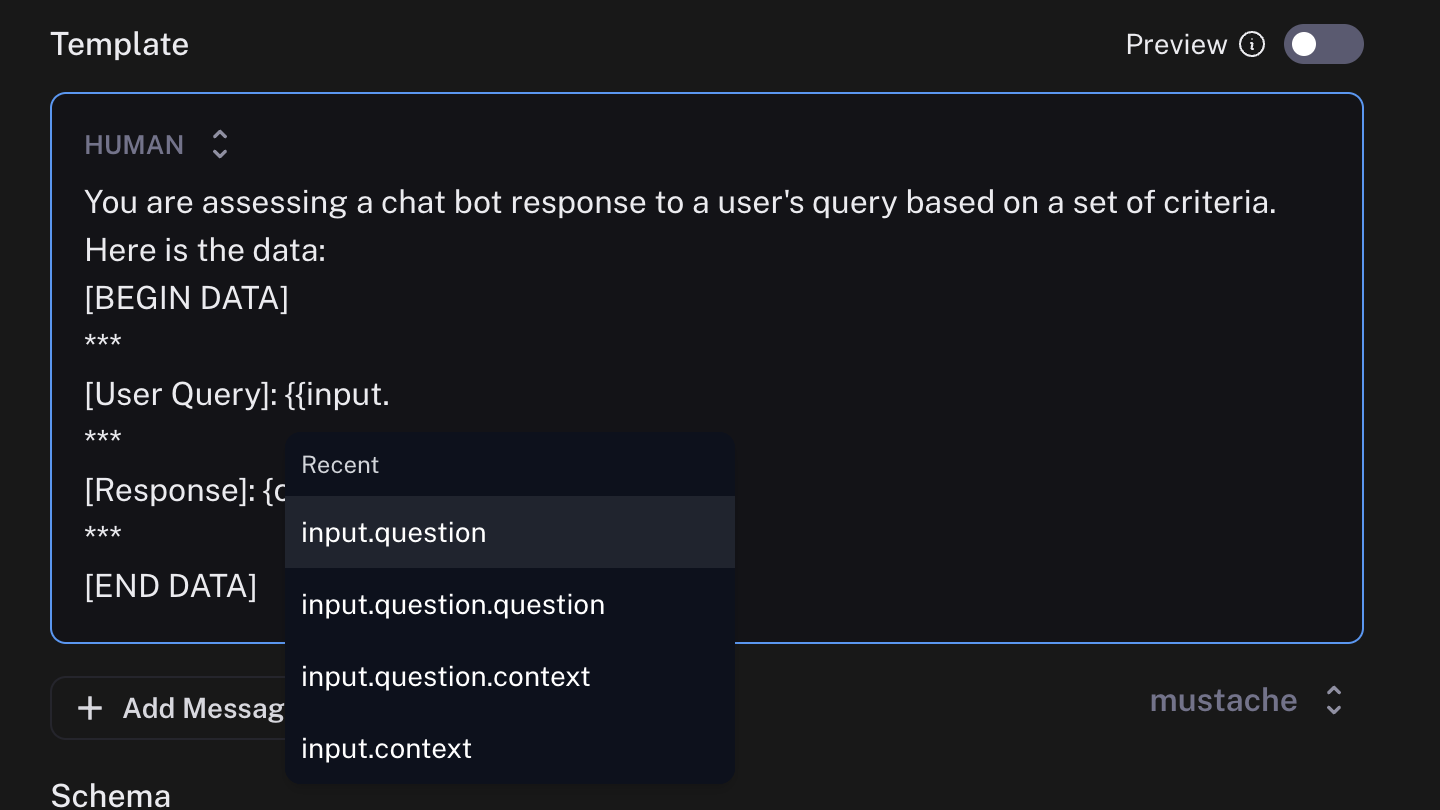

In mustache templating, you can get more specific. For example, if you have a key text in the input dictionary, you can access it using {{input.text}}. When writing your prompt, you'll be shown suggestions based on your recent run schema.

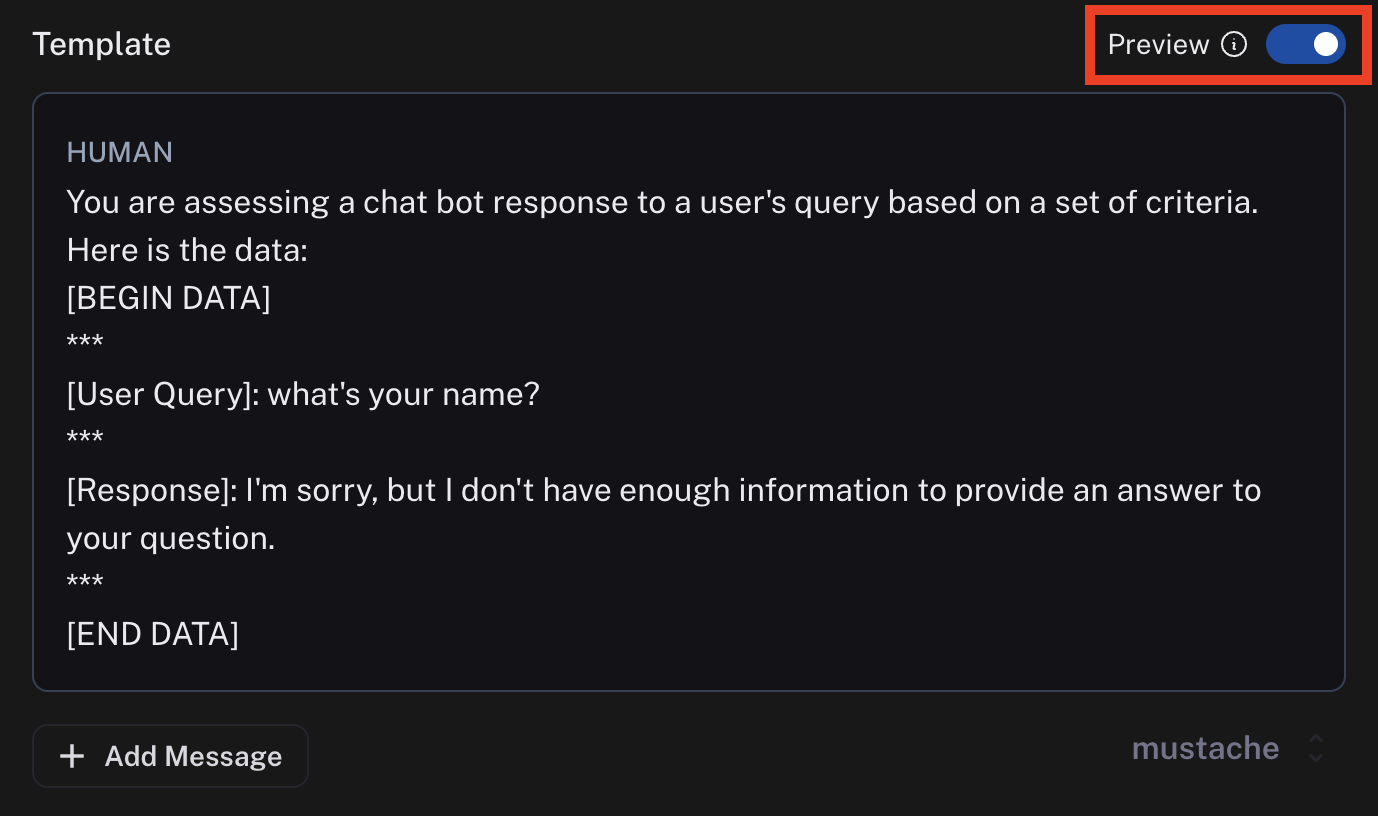

Previewing the prompt will show you an example of what the formatted prompt will look like. This preview pulls the input and output of the most recent run.

You can configure an evaluation prompt that doesn't match the schema of your recent runs, but the dropdown suggestions and preview function won't work as expected.

Pull a prompt from the LangChain Hub

You can also pull a prompt from the LangChain Hub. You can pull any public structured prompt.

You can't edit these prompts directly within the prompt editor, but you can view the prompt and the schema it uses. If the prompt is your own, you can edit it in the playground and commit the version. If the prompt is someone else's, you can fork their prompt, make your edits in the playground and then pull in your new prompt.

Criteria

An evaluator will attach arbitrary metadata tags to a run. These tags will have a name and a value. You can configure this in the Criteria section.

The names and the descriptions of the fields will be passed in to the prompt. Behind the scenes, we use tool calling to coerce the output of the LLM in to the score you specify.

The name of the criteria cannot have spaces since it is used as the name of a tool.

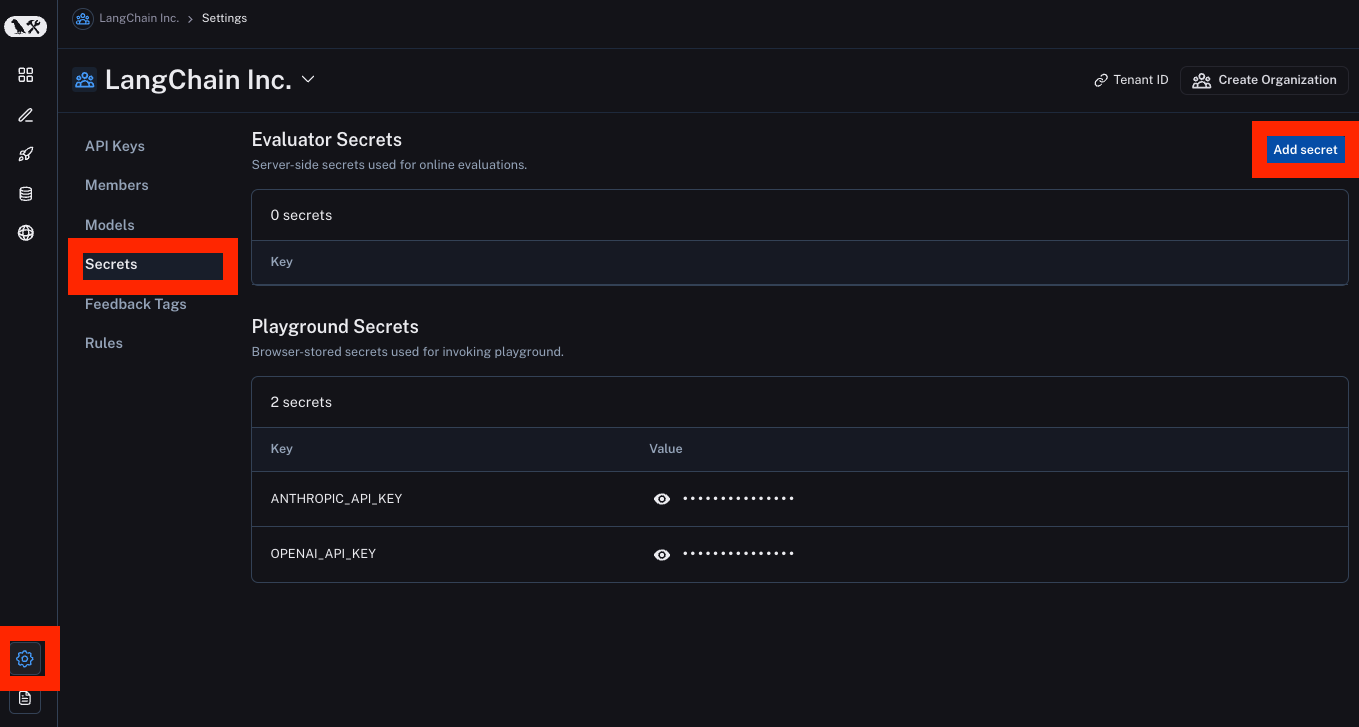

Set API keys

Online evaluation uses LLM-as-a-judge evaluation. In order to set the API keys to use for these invocations, navigate to the Settings -> Secrets -> Add secret page and add any API keys there.