Trace with LangChain (Python and JS/TS)

LangSmith integrates seamlessly with LangChain (Python and JS), the popular open-source framework for building LLM applications.

Installation

Install the core library and the OpenAI integration for Python and JS (we use the OpenAI integration for the code snippets below).

For a full list of packages available, see the LangChain Python docs and LangChain JS docs.


pip install langchain_openai langchain_core

yarn add @langchain/openai @langchain/core

npm install @langchain/openai @langchain/core

pnpm add @langchain/openai @langchain/core

Quick start

1. Configure your environment

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_API_KEY=<your-api-key>

# The examples below use the OpenAI API, so you will also need an OpenAI API key:

export OPENAI_API_KEY=<your-openai-api-key>
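If you cannot run shell exports (for example, in a notebook), a common alternative is to set the same variables from Python early in your program, before your first chain invocation. A minimal sketch, assuming the placeholder values are replaced with real keys (ideally read from a secrets store rather than hard-coded):

```python
import os

# Enable LangSmith tracing for LangChain runnables invoked later in this process.
os.environ["LANGCHAIN_TRACING_V2"] = "true"
# Placeholders (assumption): substitute your real keys before invoking a chain.
os.environ["LANGCHAIN_API_KEY"] = "<your-api-key>"
os.environ["OPENAI_API_KEY"] = "<your-openai-api-key>"
```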

2. Log a trace

No extra code is needed to log a trace to LangSmith. Just run your LangChain code as you normally would.

Python:

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

prompt = ChatPromptTemplate.from_messages([

("system", "You are a helpful assistant. Please respond to the user's request only based on the given context."),

("user", "Question: {question}\nContext: {context}")

])

model = ChatOpenAI(model="gpt-3.5-turbo")

output_parser = StrOutputParser()

chain = prompt | model | output_parser

question = "Can you summarize this morning's meetings?"

context = "During this morning's meeting, we solved all world conflict."

chain.invoke({"question": question, "context": context})

TypeScript:

import { ChatOpenAI } from "@langchain/openai";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { StringOutputParser } from "@langchain/core/output_parsers";

const prompt = ChatPromptTemplate.fromMessages([

["system", "You are a helpful assistant. Please respond to the user's request only based on the given context."],

["user", "Question: {question}\nContext: {context}"],

]);

const model = new ChatOpenAI({ modelName: "gpt-3.5-turbo" });

const outputParser = new StringOutputParser();

const chain = prompt.pipe(model).pipe(outputParser);

const question = "Can you summarize this morning's meetings?"

const context = "During this morning's meeting, we solved all world conflict."

await chain.invoke({ question: question, context: context });

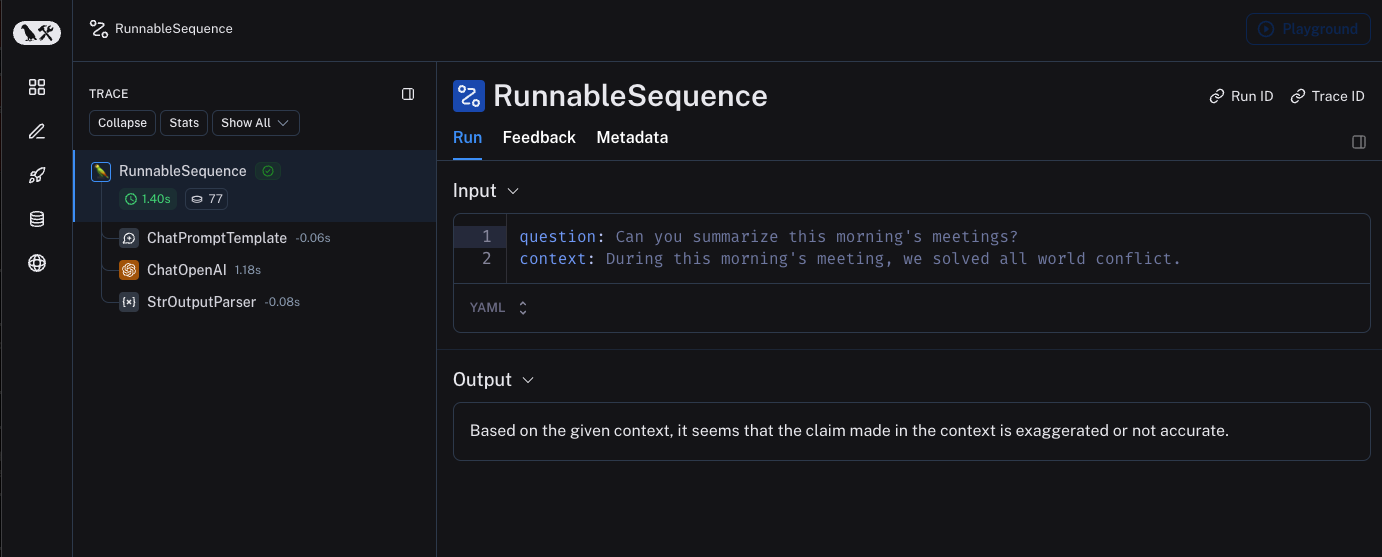

3. View your trace

By default, the trace will be logged to the project with the name default. An example of a trace logged using the above code is made public and can be viewed here.

Trace selectively

The previous section showed how to trace all invocations of LangChain runnables within your application by setting a single environment variable. While this is a convenient way to get started, you may want to trace only specific invocations or parts of your application.

There are two ways to do this in Python: by manually passing a LangChainTracer instance as a callback, or by using the tracing_v2_enabled context manager.

In JS/TS, you can pass a LangChainTracer instance as a callback.

Python:

# You can configure a LangChainTracer instance to trace a specific invocation.

from langchain_core.tracers.langchain import LangChainTracer

tracer = LangChainTracer()

chain.invoke({"question": "Am I using a callback?", "context": "I'm using a callback"}, config={"callbacks": [tracer]})

# LangChain Python also supports a context manager for tracing a specific block of code.

from langchain_core.tracers.context import tracing_v2_enabled

with tracing_v2_enabled():
    # Only invocations inside this block are traced.
    chain.invoke({"question": "Am I using a context manager?", "context": "I'm using a context manager"})

# This will NOT be traced (assuming LANGCHAIN_TRACING_V2 is not set)

chain.invoke({"question": "Am I being traced?", "context": "I'm not being traced"})

TypeScript:

// You can configure a LangChainTracer instance to trace a specific invocation.

import { LangChainTracer } from "@langchain/core/tracers/tracer_langchain";

const tracer = new LangChainTracer();

await chain.invoke(

{

question: "Am I using a callback?",

context: "I'm using a callback"

},

{ callbacks: [tracer] }

);

Log to a specific project

Statically

As mentioned in the tracing conceptual guide, LangSmith uses the concept of a Project to group traces. If left unspecified, traces are logged to the project named default. You can set the LANGCHAIN_PROJECT environment variable to configure a custom project name for an entire application run. Set it before executing your application.

export LANGCHAIN_PROJECT=my-project

Dynamically

Building on the previous section, you can also set the project name for a specific LangChainTracer instance, or pass it as a parameter to the tracing_v2_enabled context manager in Python.
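Taken together with the static option, the precedence is: an explicitly passed project name wins, then LANGCHAIN_PROJECT, then default. A simplified sketch of that rule, where `resolve_project_name` is a hypothetical helper and the real SDK may consult additional settings:

```python
from __future__ import annotations

import os
from typing import Optional


def resolve_project_name(explicit: Optional[str] = None) -> str:
    """Hypothetical helper: explicit project_name > LANGCHAIN_PROJECT > "default"."""
    if explicit:
        return explicit
    return os.environ.get("LANGCHAIN_PROJECT") or "default"


os.environ.pop("LANGCHAIN_PROJECT", None)
print(resolve_project_name())  # default
os.environ["LANGCHAIN_PROJECT"] = "my-project"
print(resolve_project_name())  # my-project
print(resolve_project_name("My Project"))  # My Project
```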

Python:

# You can set the project name for a specific tracer instance:

from langchain_core.tracers.langchain import LangChainTracer

tracer = LangChainTracer(project_name="My Project")

chain.invoke({"question": "Am I using a callback?", "context": "I'm using a callback"}, config={"callbacks": [tracer]})

# You can set the project name using the project_name parameter.

from langchain_core.tracers.context import tracing_v2_enabled

with tracing_v2_enabled(project_name="My Project"):
    chain.invoke({"question": "Am I using a context manager?", "context": "I'm using a context manager"})

TypeScript:

// You can set the project name for a specific tracer instance:

import { LangChainTracer } from "@langchain/core/tracers/tracer_langchain";

const tracer = new LangChainTracer({ projectName: "My Project" });

await chain.invoke(

{

question: "Am I using a callback?",

context: "I'm using a callback"

},

{ callbacks: [tracer] }

);

Add metadata and tags to traces

LangSmith supports sending arbitrary metadata and tags along with traces. This is useful for associating additional information with a trace, such as the environment in which it was executed or the user who initiated it. For information on how to query traces and runs by metadata and tags, see this guide.

When you attach metadata or tags to a runnable (either through the RunnableConfig or at runtime with invocation params), they are inherited by all child runnables of that runnable.
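To make the inheritance rule concrete, here is a toy model in plain Python (not LangChain code; `ToyConfig` and `inherit` are hypothetical) that mirrors the Python example below: the chain's root run carries both the with_config values and the runtime values, every child run inherits them, and only the model run adds "model-tag". In this toy, a child's own metadata value wins on a key clash.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ToyConfig:
    """Hypothetical stand-in for the tags/metadata part of a RunnableConfig."""
    tags: list[str] = field(default_factory=list)
    metadata: dict[str, str] = field(default_factory=dict)


def inherit(parent: ToyConfig, own: ToyConfig) -> ToyConfig:
    """Combine a parent's tags/metadata with a child's own values."""
    tags = parent.tags + [t for t in own.tags if t not in parent.tags]
    return ToyConfig(tags=tags, metadata={**parent.metadata, **own.metadata})


# Root chain run: runtime (invoke) config combined with the chain's with_config values.
root = inherit(
    ToyConfig(["invoke-tag"], {"invoke-key": "invoke-value"}),
    ToyConfig(["config-tag"], {"config-key": "config-value"}),
)
# Children inherit everything from the root; only the model adds its own values.
prompt_run = inherit(root, ToyConfig())
model_run = inherit(root, ToyConfig(["model-tag"], {"model-key": "model-value"}))
print(prompt_run.tags)  # ['invoke-tag', 'config-tag']
print(model_run.tags)  # ['invoke-tag', 'config-tag', 'model-tag']
```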

Python:

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

prompt = ChatPromptTemplate.from_messages([

("system", "You are a helpful AI."),

("user", "{input}")

])

# The tag "model-tag" and metadata {"model-key": "model-value"} will be attached to the ChatOpenAI run only

chat_model = ChatOpenAI().with_config({"tags": ["model-tag"], "metadata": {"model-key": "model-value"}})

output_parser = StrOutputParser()

# Tags and metadata can be configured with RunnableConfig

chain = (prompt | chat_model | output_parser).with_config({"tags": ["config-tag"], "metadata": {"config-key": "config-value"}})

# Tags and metadata can also be passed at runtime

chain.invoke({"input": "What is the meaning of life?"}, {"tags": ["invoke-tag"], "metadata": {"invoke-key": "invoke-value"}})

TypeScript:

import { ChatOpenAI } from "@langchain/openai";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { StringOutputParser } from "@langchain/core/output_parsers";

const prompt = ChatPromptTemplate.fromMessages([

["system", "You are a helpful AI."],

["user", "{input}"]

]);

// The tag "model-tag" and metadata {"model-key": "model-value"} will be attached to the ChatOpenAI run only

const model = new ChatOpenAI().withConfig({ tags: ["model-tag"], metadata: { "model-key": "model-value" } });

const outputParser = new StringOutputParser();

// Tags and metadata can be configured with RunnableConfig

const chain = prompt.pipe(model).pipe(outputParser).withConfig({ tags: ["config-tag"], metadata: { "config-key": "config-value" } });

// Tags and metadata can also be passed at runtime

await chain.invoke({ input: "What is the meaning of life?" }, { tags: ["invoke-tag"], metadata: { "invoke-key": "invoke-value" } });

Customize run name

When you create a run, you can specify a name for the run. This name is used to identify the run in LangSmith and can be used to filter and group runs. The name is also used as the title of the run in the LangSmith UI.

You can set the run name in the RunnableConfig when constructing the runnable (with_config in Python, withConfig in JS/TS), or pass it in the config at invocation time (run_name in Python, runName in JS/TS).

This feature is not currently supported directly for LLM objects.
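The defaulting rule stated in the code comments below (the class name of the traced object, unless a run name is configured) can be sketched as follows. `MyChain` and `resolve_run_name` are hypothetical, and LangChain's own logic may consider other name sources as well:

```python
from __future__ import annotations

from typing import Any, Optional


class MyChain:
    """Hypothetical placeholder for any traced runnable."""


def resolve_run_name(obj: Any, config: Optional[dict] = None) -> str:
    """Hypothetical rule: use config["run_name"] when given, else the object's class name."""
    if config and config.get("run_name"):
        return config["run_name"]
    return type(obj).__name__


print(resolve_run_name(MyChain()))  # MyChain
print(resolve_run_name(MyChain(), {"run_name": "MyCustomChain"}))  # MyCustomChain
```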

Python:

# When tracing within LangChain, run names default to the class name of the traced object (e.g., 'ChatOpenAI').

configured_chain = chain.with_config({"run_name": "MyCustomChain"})

configured_chain.invoke({"input": "What is the meaning of life?"})

# You can also configure the run name at invocation time, like below

chain.invoke({"input": "What is the meaning of life?"}, {"run_name": "MyCustomChain"})

TypeScript:

// When tracing within LangChain, run names default to the class name of the traced object (e.g., 'ChatOpenAI').

const configuredChain = chain.withConfig({ runName: "MyCustomChain" });

await configuredChain.invoke({ input: "What is the meaning of life?" });

// You can also configure the run name at invocation time, like below

await chain.invoke({ input: "What is the meaning of life?" }, { runName: "MyCustomChain" });

Access run (span) ID for LangChain invocations

When you invoke a LangChain object, you can access the run ID of the invocation. This run ID can be used to query the run in LangSmith.

In Python, you can use the collect_runs context manager to access the run ID.

In JS/TS, you can use a RunCollectorCallbackHandler instance to access the run ID.

Python:

from langchain_openai import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.output_parsers import StrOutputParser

from langchain_core.tracers.context import collect_runs

prompt = ChatPromptTemplate.from_messages([

("system", "You are a helpful assistant. Please respond to the user's request only based on the given context."),

("user", "Question: {question}\n\nContext: {context}")

])

model = ChatOpenAI(model="gpt-3.5-turbo")

output_parser = StrOutputParser()

chain = prompt | model | output_parser

question = "Can you summarize this morning's meetings?"

context = "During this morning's meeting, we solved all world conflict."

with collect_runs() as cb:
    result = chain.invoke({"question": question, "context": context})
    # Get the root run id
    run_id = cb.traced_runs[0].id

print(run_id)

TypeScript:

import { ChatOpenAI } from "@langchain/openai";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { StringOutputParser } from "@langchain/core/output_parsers";

import { RunCollectorCallbackHandler } from "@langchain/core/tracers/run_collector";

const prompt = ChatPromptTemplate.fromMessages([

["system", "You are a helpful assistant. Please respond to the user's request only based on the given context."],

["user", "Question: {question}\n\nContext: {context}"],

]);

const model = new ChatOpenAI({ modelName: "gpt-3.5-turbo" });

const outputParser = new StringOutputParser();

const chain = prompt.pipe(model).pipe(outputParser);

const runCollector = new RunCollectorCallbackHandler();

const question = "Can you summarize this morning's meetings?"

const context = "During this morning's meeting, we solved all world conflict."

await chain.invoke(

{ question: question, context: context },

{ callbacks: [runCollector] }

);

const runId = runCollector.tracedRuns[0].id;

console.log(runId);

Ensure all traces are submitted before exiting

In LangChain Python, LangSmith tracing runs in a background thread to avoid blocking your production application. This means your process may exit before all traces have been posted to LangSmith. This is especially likely in serverless environments, where the VM may be terminated as soon as your chain or agent completes.

In LangChain JS/TS, the default is to block for a short period of time for the trace to finish due to the greater popularity of serverless environments. You can make callbacks asynchronous by setting the LANGCHAIN_CALLBACKS_BACKGROUND environment variable to "true".

For both languages, LangChain exposes methods to wait for traces to be submitted before exiting your application. Below is an example:
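Why an explicit wait is needed can be seen in a small, self-contained analogue: work is handed to a daemon thread through a queue, so `submit` returns immediately, and anything still queued would be lost at process exit unless something blocks on the queue first. This is a toy model (`BackgroundSender` is hypothetical, not the LangSmith client); its `wait()` method plays the role that `wait_for_all_tracers()` and `awaitAllCallbacks()` play in the examples below.

```python
from __future__ import annotations

import queue
import threading
import time


class BackgroundSender:
    """Toy background uploader: submissions return at once, a worker thread sends them later."""

    def __init__(self) -> None:
        self._queue: queue.Queue = queue.Queue()
        self.sent: list[dict] = []
        # daemon=True: the thread dies with the process, which is why an explicit wait matters.
        threading.Thread(target=self._worker, daemon=True).start()

    def _worker(self) -> None:
        while True:
            item = self._queue.get()
            try:
                time.sleep(0.01)  # simulate network latency
                self.sent.append(item)
            finally:
                self._queue.task_done()

    def submit(self, trace: dict) -> None:
        self._queue.put(trace)  # non-blocking from the caller's point of view

    def wait(self) -> None:
        """Block until every submitted trace has been processed."""
        self._queue.join()


sender = BackgroundSender()
try:
    for i in range(3):
        sender.submit({"run": i})
finally:
    sender.wait()
print(len(sender.sent))  # 3
```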

Python:

from langchain_openai import ChatOpenAI

from langchain_core.tracers.langchain import wait_for_all_tracers

llm = ChatOpenAI()

try:
    llm.invoke("Hello, World!")
finally:
    # Block until all pending traces have been submitted to LangSmith
    wait_for_all_tracers()

TypeScript:

import { ChatOpenAI } from "@langchain/openai";

import { awaitAllCallbacks } from "@langchain/core/callbacks/promises";

try {

const llm = new ChatOpenAI();

const response = await llm.invoke("Hello, World!");

} catch (e) {

// handle error

} finally {

await awaitAllCallbacks();

}

Trace without setting environment variables

As mentioned in other guides, the following environment variables let you enable tracing and configure the API endpoint, the API key, and the tracing project:

- LANGCHAIN_TRACING_V2
- LANGCHAIN_API_KEY
- LANGCHAIN_ENDPOINT
- LANGCHAIN_PROJECT

However, in some environments, it is not possible to set environment variables. In these cases, you can set the tracing configuration programmatically.

This largely builds on the previous sections.
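One way to think about the programmatic route is that the four settings listed above become explicit values you construct yourself. The sketch below is illustrative only: `TracingSettings` is a hypothetical container, its fallback values are assumptions (the endpoint fallback is the hosted URL used in the examples below), and in practice you pass the fields to `Client(api_key=..., api_url=...)` and `LangChainTracer(client=..., project_name=...)`.

```python
from __future__ import annotations

import os
from dataclasses import dataclass
from typing import Mapping, Optional

DEFAULT_ENDPOINT = "https://api.smith.langchain.com"  # hosted LangSmith; change for self-hosted


@dataclass(frozen=True)
class TracingSettings:
    """Hypothetical container for the four tracing settings."""
    enabled: bool
    api_key: Optional[str]
    endpoint: str
    project: str

    @classmethod
    def from_env(cls, env: Mapping[str, str] = os.environ) -> TracingSettings:
        """Read the settings from environment-style variables (fallbacks are assumptions)."""
        return cls(
            enabled=env.get("LANGCHAIN_TRACING_V2", "").lower() == "true",
            api_key=env.get("LANGCHAIN_API_KEY"),
            endpoint=env.get("LANGCHAIN_ENDPOINT", DEFAULT_ENDPOINT),
            project=env.get("LANGCHAIN_PROJECT", "default"),
        )


# Without environment variables: build the settings explicitly,
# e.g. with an API key fetched from a secrets manager.
explicit = TracingSettings(
    enabled=True,
    api_key="YOUR_API_KEY",
    endpoint=DEFAULT_ENDPOINT,
    project="test-no-env",
)
# The same shape, read from an environment-like mapping for comparison.
from_vars = TracingSettings.from_env({"LANGCHAIN_TRACING_V2": "true", "LANGCHAIN_PROJECT": "test-no-env"})
print(from_vars.project, from_vars.api_key)  # test-no-env None
```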

Python:

from langchain_core.tracers.langchain import LangChainTracer

from langsmith import Client

# You can create a client instance with an api key and api url

client = Client(

api_key="YOUR_API_KEY", # This can be retrieved from a secrets manager

api_url="https://api.smith.langchain.com", # Update appropriately for self-hosted installations

)

# You can pass the client and project_name to the LangChainTracer instance

tracer = LangChainTracer(client=client, project_name="test-no-env")

chain.invoke({"question": "Am I using a callback?", "context": "I'm using a callback"}, config={"callbacks": [tracer]})

# LangChain Python also supports a context manager which allows passing the client and project_name

from langchain_core.tracers.context import tracing_v2_enabled

with tracing_v2_enabled(client=client, project_name="test-no-env"):
    chain.invoke({"question": "Am I using a context manager?", "context": "I'm using a context manager"})

TypeScript:

import { LangChainTracer } from "@langchain/core/tracers/tracer_langchain";

import { Client } from "langsmith";

// You can create a client instance with an api key and api url

const client = new Client(

{

apiKey: "YOUR_API_KEY",

apiUrl: "https://api.smith.langchain.com",

}

);

// You can pass the client and projectName to the LangChainTracer instance

const tracer = new LangChainTracer({client, projectName: "test-no-env"});

await chain.invoke(

{

question: "Am I using a callback?",

context: "I'm using a callback",

},

{ callbacks: [tracer] }

);

Interoperability between LangChain.JS and LangSmith SDK

Tracing LangChain objects inside traceable (JS only)

Starting with langchain@0.2.x, LangChain objects are traced automatically when used inside functions wrapped with traceable, inheriting the client, tags, metadata, and project name of the traceable function.

For LangChain versions older than 0.2.x, you need to manually pass a LangChainTracer instance created from the tracing context of the enclosing traceable function, as shown below with getLangchainCallbacks().

import { ChatOpenAI } from "@langchain/openai";

import { ChatPromptTemplate } from "@langchain/core/prompts";

import { StringOutputParser } from "@langchain/core/output_parsers";

import { getLangchainCallbacks } from "langsmith/langchain";

import { traceable } from "langsmith/traceable";

const prompt = ChatPromptTemplate.fromMessages([

[

"system",

"You are a helpful assistant. Please respond to the user's request only based on the given context.",

],

["user", "Question: {question}\nContext: {context}"],

]);

const model = new ChatOpenAI({ modelName: "gpt-3.5-turbo" });

const outputParser = new StringOutputParser();

const chain = prompt.pipe(model).pipe(outputParser);

const main = traceable(

async (input: { question: string; context: string }) => {

// this is done automatically in `langchain` >= 0.2.x

// only do this if you are using an older version of `langchain`

const callbacks = await getLangchainCallbacks();

// Pass the chain input first and the callbacks in the config (second argument)
const response = await chain.invoke(input, { callbacks });

return response;

},

{ name: "main" }

);

Manually tracing LangChain child runs via traceable / RunTree API (JS only)

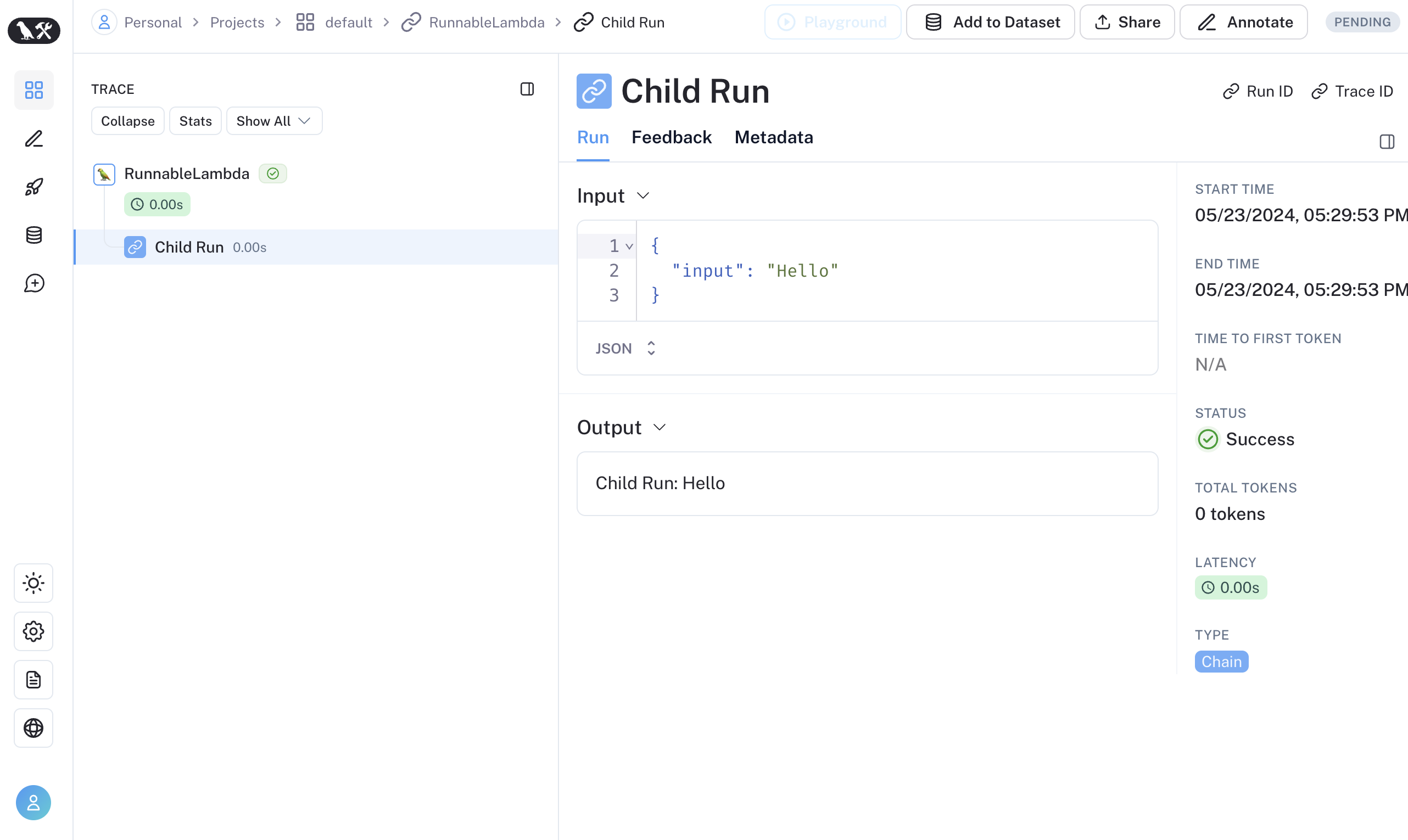

In some use cases, you might want to run traceable functions as part of a RunnableSequence, or imperatively trace child runs of a LangChain run via the RunTree API.

You can convert the existing LangChain RunnableConfig to an equivalent RunTree object by using RunTree.fromRunnableConfig, or you can pass the RunnableConfig as the first argument of the wrapped traceable function. Either approach produces the trace tree shown above.

Traceable:

import { traceable } from "langsmith/traceable";

import { RunnableLambda } from "@langchain/core/runnables";

import { RunnableConfig } from "@langchain/core/runnables";

const tracedChild = traceable((input: string) => `Child Run: ${input}`, {

name: "Child Run",

});

const parrot = new RunnableLambda({

func: async (input: { text: string }, config?: RunnableConfig) => {

// Pass the config to existing traceable function

await tracedChild(config, input.text);

return input.text;

},

});

Run Tree:

import { RunTree } from "langsmith/run_trees";

import { RunnableLambda } from "@langchain/core/runnables";

import { RunnableConfig } from "@langchain/core/runnables";

const parrot = new RunnableLambda({

func: async (input: { text: string }, config?: RunnableConfig) => {

// create the RunTree from the RunnableConfig of the RunnableLambda

const childRunTree = RunTree.fromRunnableConfig(config, {

name: "Child Run",

});

childRunTree.inputs = { input: input.text };

await childRunTree.postRun();

childRunTree.outputs = { output: `Child Run: ${input.text}` };

await childRunTree.patchRun();

return input.text;

},

});